Visual Analysis by PolygrAI

An Innovative Approach Towards Human Behavioral Analysis

Introduction

Visual behavioral analysis is transforming how organizations assess truthfulness, authenticity, and hidden intentions. On our AI interviewer platform, analyzing facial expressions, eye movements, micro-expressions, and subtle body language cues no longer relies on subjective human judgment. Instead, our visual intelligence engine distills complex human behaviors into clear, data-driven insights that power smarter hiring, compliance screenings, risk assessments, and more. Here, innovation meets intuition for unprecedented behavioral clarity.

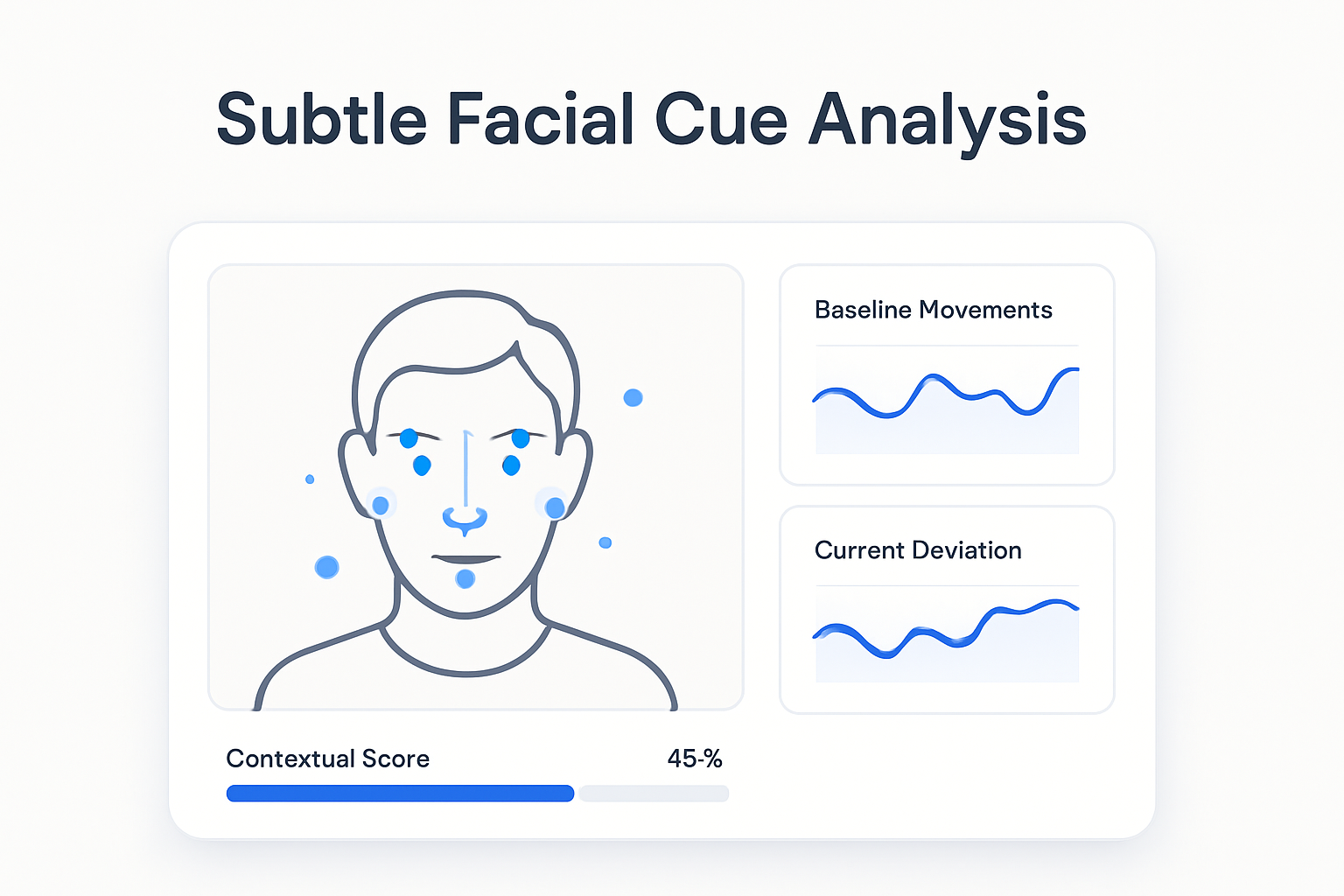

Subtle Facial Cue Analysis

Our system measures minute facial movements, such as eyebrow twitches and lip tension, and places them in context. By comparing these tiny shifts against each person’s natural baseline, we reveal deviations that may signal stress, confidence, or hesitation. This is not about catching lies but about giving your team an objective supplement to human judgment.
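As a rough illustration of baseline comparison (a generic sketch, not PolygrAI's actual implementation), a deviation check can be written as a z-score test against a person's own baseline. The function name, the sample intensities, and the threshold of 2.0 are all assumptions made for this example.

```python
from statistics import mean, stdev

def baseline_deviations(baseline, observed, z_threshold=2.0):
    """Return (index, z_score) pairs for observed samples far from the baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation of the baseline
    if sigma == 0:
        raise ValueError("baseline has no variation; z-scores are undefined")
    flagged = []
    for i, value in enumerate(observed):
        z = (value - mu) / sigma
        if abs(z) >= z_threshold:
            flagged.append((i, z))
    return flagged

# Hypothetical eyebrow-raise intensities in arbitrary units.
baseline = [0.10, 0.12, 0.11, 0.09, 0.10, 0.13, 0.11]
observed = [0.11, 0.12, 0.25, 0.10]

for index, z in baseline_deviations(baseline, observed):
    print(f"sample {index} deviates from baseline (z = {z:.1f})")
```

Only the third observed sample stands out here; the others stay within normal variation for this hypothetical person, which is the point of comparing against an individual baseline instead of a population average.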

Eye Movement Decoding

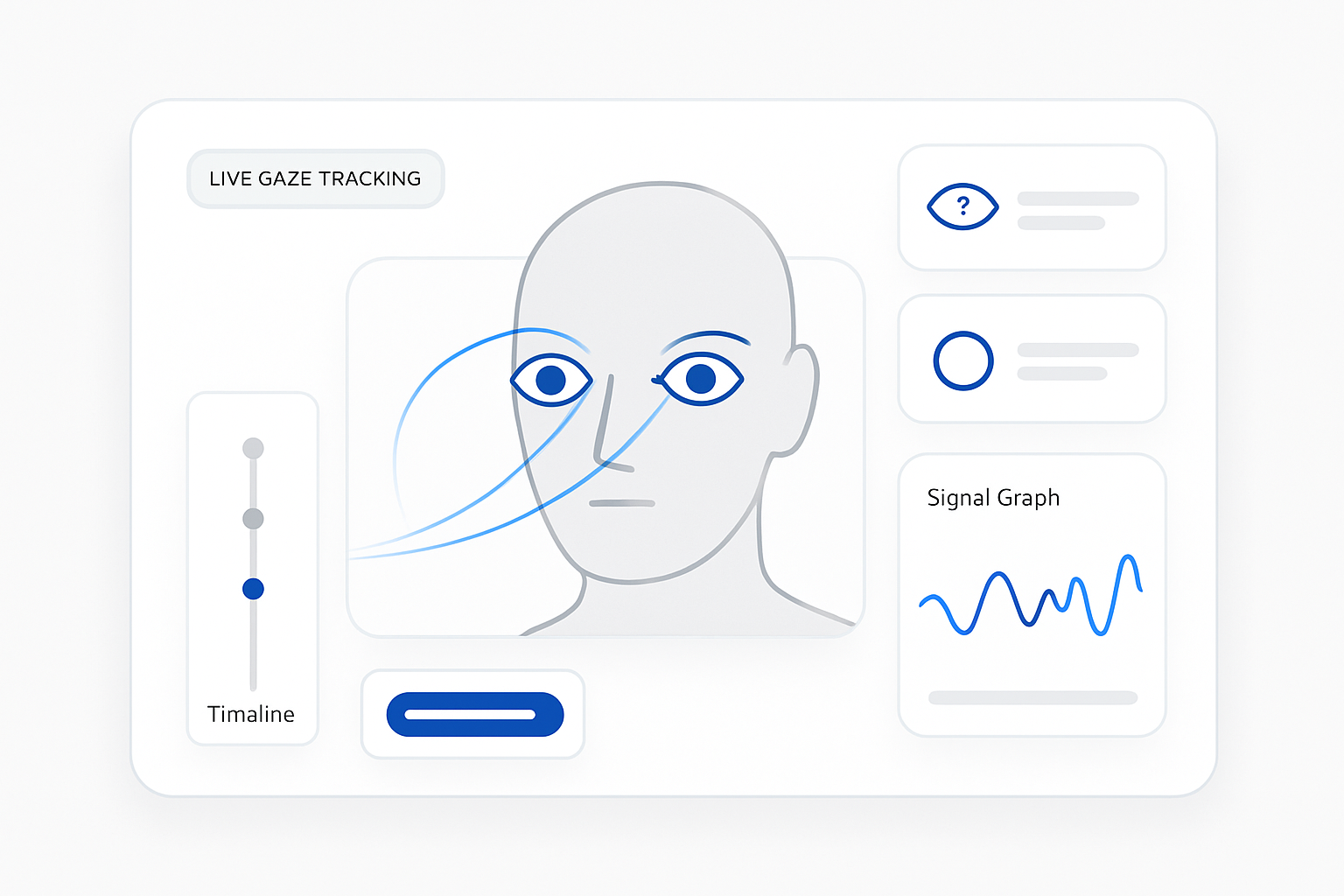

Eye movements expose attention shifts, cognitive load, and moments of discomfort. Our computer vision algorithms track gaze patterns in real time and flag anomalies that may be linked to concealed truths or confusion. The result is a richer understanding of authenticity and engagement in every interaction.

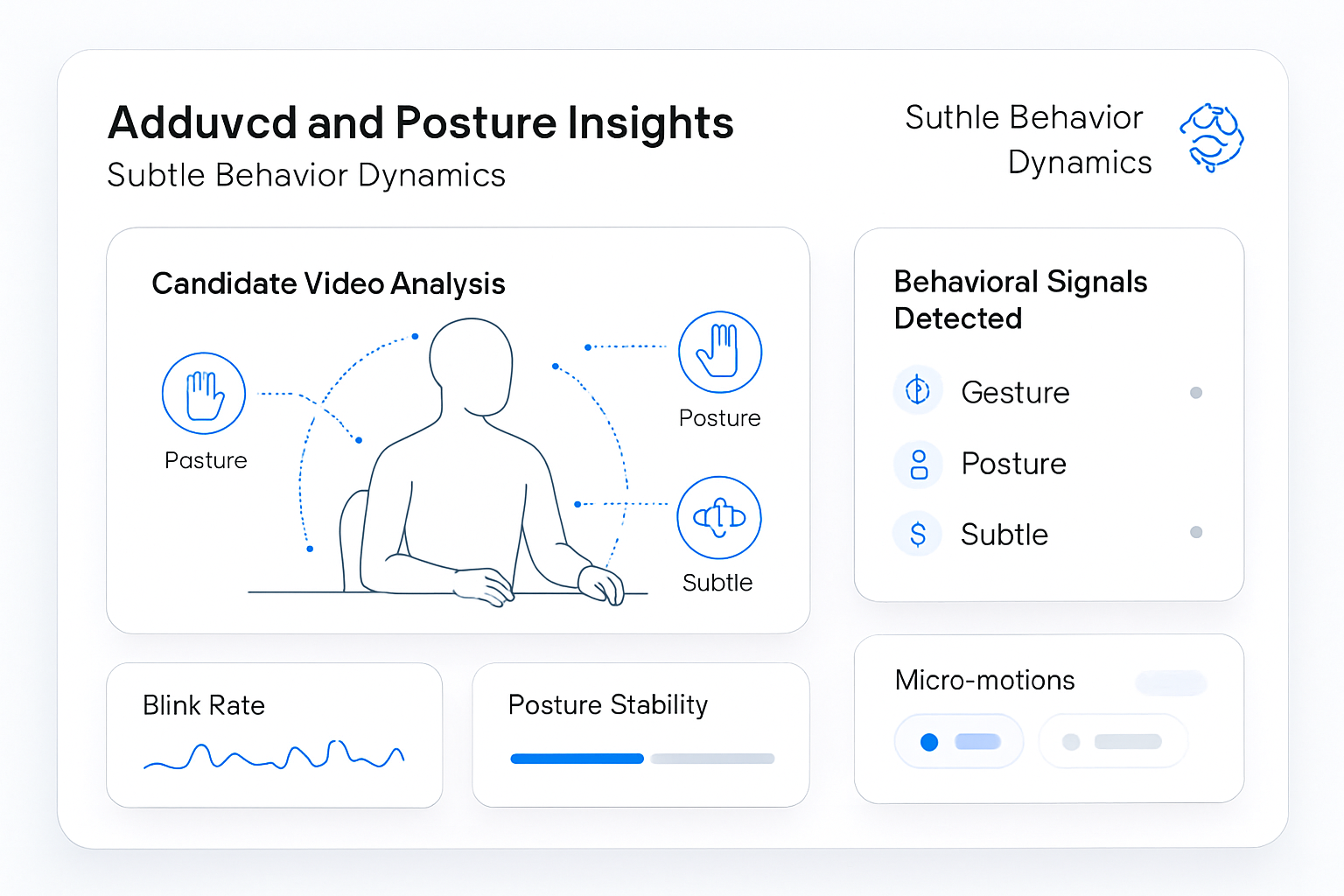

Subtle Behavior Dynamics

Beyond obvious cues, our deep learning engine detects nuanced behavioral fluctuations such as blink rate variations and micro-shifts in weight distribution. By weaving these signals together, the engine gives you a holistic profile of honesty and emotional state, which is critical for high-stakes decisions.

Visual Behavioral Analysis in AI Interviews

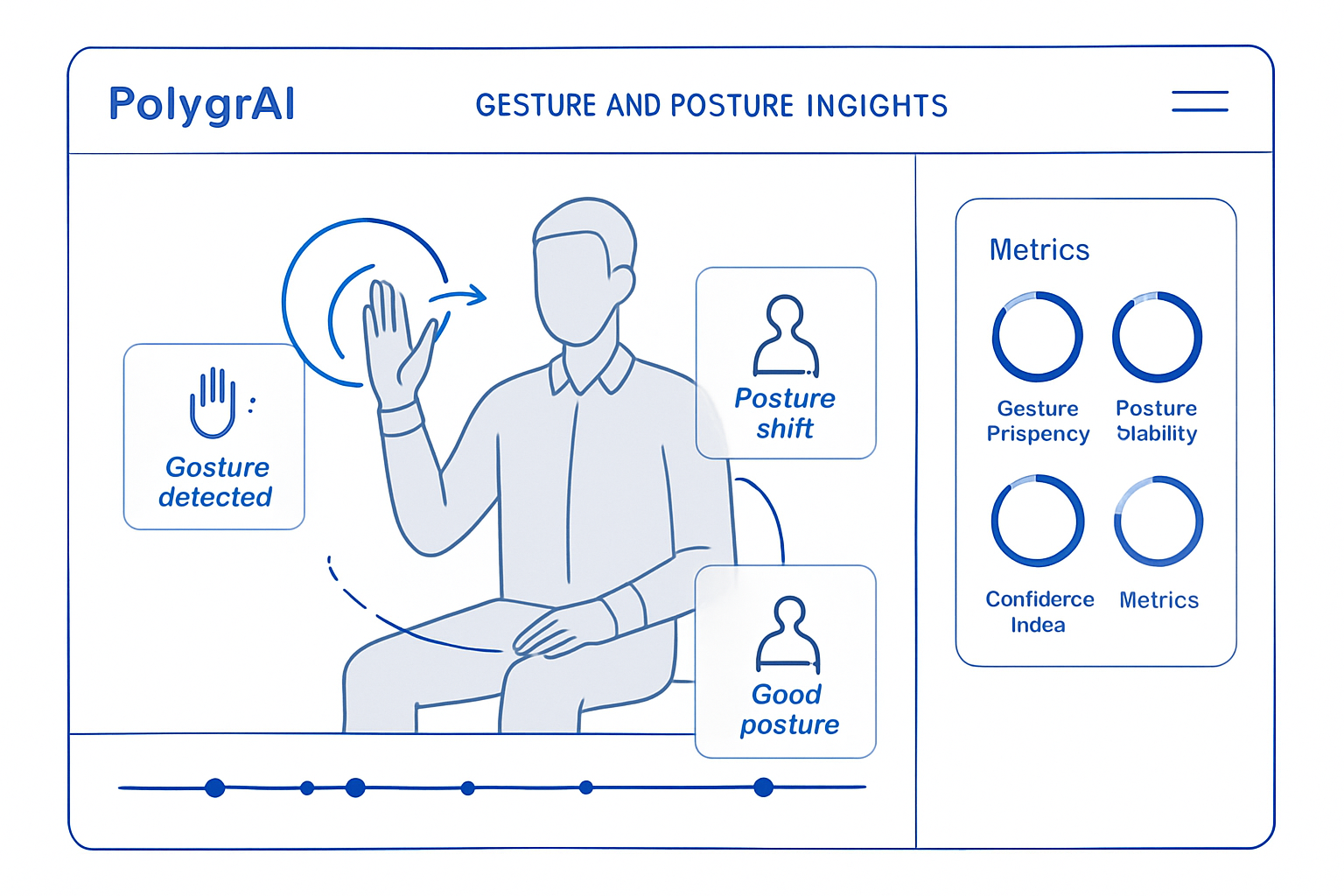

Every day, billions of interactions hinge on nonverbal communication: facial micro-expressions, gestures, posture, and eye movements carry layers of meaning that human observers often miss. PolygrAI turns these rich signals into data to power an AI-powered polygraph that learns from subtleties invisible to the naked eye. High-resolution video feeds are processed frame by frame to extract behavioral markers such as resting pupil size, blink frequency, micro-smile asymmetry, and more, so that each movement becomes a measurable indicator of truthfulness or intent.

At the core of our visual intelligence engine is a suite of convolutional neural networks trained on ethically sourced, consented data. Facial expression analysis modules detect action units defined by the Facial Action Coding System, while temporal models isolate fleeting micro-expressions and pose estimation algorithms map skeletal joints for gesture and posture analytics. Eye tracking subsystems calculate fixation durations and saccade patterns. These streams of insight feed a decision engine that applies rigorous statistical analysis to every behavioral fluctuation.
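To make the fixation and saccade step more concrete, here is a minimal sketch of the widely used velocity-threshold approach (often called I-VT). It is a generic technique shown for illustration and is not confirmed to be what PolygrAI uses. The function name, the pixel-per-second threshold, the minimum duration, and the gaze samples are all hypothetical.

```python
import math

def detect_fixations(samples, velocity_threshold=50.0, min_duration=0.1):
    """Split gaze samples into fixations using a velocity threshold (I-VT).

    samples: list of (time_s, x, y) tuples sorted by time.
    Returns a list of (start_s, end_s) tuples, one per fixation.
    """
    fixations = []
    start = None  # start time of the slow-moving run being built
    end = None    # end time of that run

    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # ignore duplicate or out-of-order samples
        velocity = math.hypot(x1 - x0, y1 - y0) / dt  # pixels per second
        if velocity < velocity_threshold:
            if start is None:
                start = t0
            end = t1
        else:
            # Fast movement is treated as a saccade; close any open run.
            if start is not None and end - start >= min_duration:
                fixations.append((start, end))
            start = None

    # Close the final run if the recording ends during a fixation.
    if start is not None and end - start >= min_duration:
        fixations.append((start, end))
    return fixations

# Hypothetical gaze samples: a steady look, one jump, another steady look.
gaze = [
    (0.00, 100, 100), (0.05, 101, 100), (0.10, 100, 101), (0.15, 101, 101),
    (0.20, 300, 200), (0.25, 301, 200), (0.30, 300, 201), (0.35, 301, 201),
]
for start_s, end_s in detect_fixations(gaze):
    print(f"fixation from {start_s:.2f}s to {end_s:.2f}s")
```

Fixation durations follow directly from each (start, end) pair, and the gaps between fixations mark the saccades.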

PolygrAI’s commitment to ethical data collection means every participant is fully informed and fairly compensated. Rigorous debiasing ensures representation across ages, ethnicities, and contexts, and production data is never used to retrain models, so privacy remains inviolate. As each interview unfolds, our AI interviewer ingests video responses in real time, merges visual metrics with vocal and linguistic analysis, and delivers a unified dashboard of truthfulness scores, emotional stability indicators, and attention metrics.

Organizations benefit from faster candidate screening with enterprise-grade accuracy, interviews that scale without additional resources, and audit-ready analytics for compliance. Looking ahead, continuous learning will refine cultural and contextual sensitivity, and fusion with other modalities promises even greater precision. Soon, real-time authenticity alerts will integrate directly into video platforms, transforming how trust is detected and elevating decision-making at every level.

OVERVIEW OF OUR TECHNOLOGY

Multi-Modal Analysis Engine

Visual

Our system meticulously analyzes facial micro-expressions, eye movements, gestures, and posture changes alongside subtle body language cues to detect behavioral fluctuations.

Vocal

Our features provide a comprehensive view of the behavioral dynamics in your video session. Each feature explains a key metric and how it helps you make a better, more confident decision.

Linguistic

Leveraging validated psychological metrics, linguistic pattern analysis, and vocal and facial behavior cues, we identify subtle indicators of deception across assessments.

Psychological

Our system applies predictive psychometric modeling, semantic emotion analysis and subtle behavioral cue detection to infer personality drivers and relational dynamics.

Introducing PolygrAI Interviewer

Elevate your hiring process with real-time behavioral insights, seamless video integration, and AI-driven risk scoring for confident candidate decisions.

- Get micro-expression, voice-tone, and sentiment insights as you interview.

- Learns each candidate’s normal behavior patterns to pinpoint subtle deviations under stress.

- Receive post-interview transcripts, risk scores, and emotion summaries for easy review.

Frequently Asked Questions

Our engine captures hundreds of data points per second—from micro eyebrow raises and blink rates to head tilts and shoulder shifts—and maps them against psychometric models. You get clear metrics (e.g. stress index, engagement level) rather than raw video, so you know what changed and why it matters.

Indicators are statistically validated on diverse, consent-driven datasets. While no single cue is infallible, combining dozens of synchronized signals yields a reliable risk score—one you can calibrate to your own tolerance for false positives or negatives.
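One simple way to picture combining signals and calibrating the result to a false-positive tolerance is sketched below. This is an illustrative assumption, not a description of PolygrAI's scoring model: the signal names, the weights, the reference scores, and both function names are hypothetical, and signals are assumed to be already normalized to the range 0 to 1.

```python
def risk_score(signals, weights):
    """Weighted average of signals that are already normalized to 0..1."""
    total_weight = sum(weights[name] for name in signals)
    return sum(signals[name] * weights[name] for name in signals) / total_weight

def threshold_for_false_positive_rate(negative_scores, max_fpr):
    """Pick a threshold so that at most max_fpr of known-negative scores exceed it.

    A score is flagged when it is strictly greater than the returned threshold.
    """
    if not negative_scores:
        raise ValueError("need at least one reference score")
    if not 0 <= max_fpr < 1:
        raise ValueError("max_fpr must be in the range [0, 1)")
    ranked = sorted(negative_scores)
    allowed = int(max_fpr * len(ranked))  # how many negatives may be flagged
    return ranked[len(ranked) - allowed - 1]

# Hypothetical normalized signals for one response, with hypothetical weights.
signals = {"blink_rate": 0.8, "gaze_aversion": 0.5, "brow_tension": 0.2}
weights = {"blink_rate": 1.0, "gaze_aversion": 2.0, "brow_tension": 1.0}
score = risk_score(signals, weights)

# Hypothetical scores from sessions known to be low risk.
reference = [0.10, 0.15, 0.20, 0.22, 0.25, 0.30, 0.35, 0.40, 0.55, 0.70]
threshold = threshold_for_false_positive_rate(reference, max_fpr=0.2)

print(f"score={score:.2f} threshold={threshold:.2f} flagged={score > threshold}")
```

Lowering `max_fpr` raises the threshold, so fewer low-risk sessions are flagged at the cost of missing more genuine cases; raising it does the opposite.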

We minimize bias through balanced training samples spanning ages, genders, and ethnicities. Ongoing audits detect and correct imbalances. Plus, every report includes confidence intervals, so you can see when the model is less certain and a result might warrant human review.

Just a standard webcam or smartphone camera and a stable internet connection. Our algorithms adapt to varied lighting and backgrounds, so there’s no need for specialized hardware.

All video data is encrypted in transit and at rest in line with SOC 2 and HIPAA requirements. Raw footage never leaves our secure servers, and visual metrics are stored separately from personal identifiers. You retain full control over retention and deletion policies.

Each interview generates a concise dashboard showing:

- Overall truthfulness and stress scores

- Time-series graphs of key indicators (e.g. engagement over each question)

- Alerts for responses that fall outside your defined thresholds

- Downloadable summaries for HR or compliance audits
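The threshold alerts in the dashboard can be pictured with a short sketch like the one below. The data layout, metric names, bounds, and function name are assumptions for illustration only; they do not describe the actual dashboard or its export format.

```python
def find_alerts(per_question_scores, thresholds):
    """Return (question, metric, value) for every value outside its allowed range.

    thresholds maps a metric name to an inclusive (low, high) range.
    Metrics without a configured range are ignored.
    """
    alerts = []
    for question, metrics in per_question_scores.items():
        for metric, value in metrics.items():
            bounds = thresholds.get(metric)
            if bounds is None:
                continue
            low, high = bounds
            if not low <= value <= high:
                alerts.append((question, metric, value))
    return alerts

# Hypothetical per-question metrics and user-defined acceptable ranges.
scores = {
    "Q1": {"stress": 0.30, "engagement": 0.80},
    "Q2": {"stress": 0.75, "engagement": 0.60},
    "Q3": {"stress": 0.40, "engagement": 0.20},
}
limits = {"stress": (0.0, 0.6), "engagement": (0.4, 1.0)}

for question, metric, value in find_alerts(scores, limits):
    print(f"{question}: {metric} = {value:.2f} is outside the defined range")
```

With these sample values, the stress reading on Q2 and the engagement reading on Q3 are reported, while Q1 passes without an alert.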

We offer lightweight SDKs and REST APIs that slot right into most video-interview or ATS platforms. A few lines of code and you’ll start receiving visual analysis alongside your existing questions—no UI overhaul required.